Sorting in Data Structures and Algorithm

Sorting Algorithm

A sorting algorithm is defined as an algorithm that puts the elements of a list in a certain order, which can be either numerical order, lexicographical order, or any user-defined order.

Efficient sorting algorithms are widely used to optimize the use of other algorithms like search and merge algorithms which require sorted lists to work correctly. There are two types of sorting:

2. Internal sorting which deals with sorting the data stored in the computer’s memory

3. External sorting which deals with sorting the data stored in files. External sorting is applied when there is voluminous data that cannot be stored in the memory.

Sorting : Basic Concepts:

Data records can be sorted based on a property. Such a component or property is called a sort key. A sort key can be defined using two or more sort keys (Multi-key). In such a case, the first key is called the primary sort key, the second is known as the secondary sort key, etc.

Sorting algorithms may require some extra space for comparison and temporary storage of few data elements. These algorithms do not require any extra space and sorting is said to happen in-place, or for example, within the array itself. This is called in-place sorting. For Example :Bubble sort.

In some sorting algorithms, the program requires space which is more than or equal to the elements being sorted. Sorting which uses equal or more space is called not-in-place sorting. For Example : Merge-sort.

A sorting algorithm is said to be adaptive, if it takes advantage of already 'sorted' elements in the list that is to be sorted. That is, while sorting if the source list has some element already sorted, adaptive algorithms will take this into account and will try not to re-order them.

A non-adaptive algorithm is one which does not take into account the elements which are already sorted. They try to force every single element to be re-ordered to confirm their sorted-ness.

• Increasing order: 1, 2, 3, 4, 5

• Decreasing Order : 5, 4, 3, 2, 1

• Non-Increasing Order : 5, 4, 2, 2, 1

• Non-Decreasing Order : 1, 2, 2, 4, 5

Bubble Sort:

In bubble sorting, consecutive adjacent pairs of elements in the array are compared with each other. If the element at the lower index is greater than the element at the higher index, the two elements are interchanged so that the element is placed before the bigger one.

This process will continue till the list of unsorted elements exhausts. This procedure of sorting is called bubble sorting because elements ‘bubble’ to the top of the list.

Note that at the end of the first pass, the largest element in the list will be placed at its proper position (i.e., at the end of the list). If the elements are to be sorted in descending order, then in first pass the smallest element is moved to the highest index of the array.

Bubble Sort : Algorithm

Bubble Sort : Example 1 [1]

• Consider an array A[ ] having following elements: sort using bubble sort A[ ] = {30, 52, 29, 87, 63, 27, 19, 54}.

Bubble Sort : Example 1 [2]Observe that after the end of the second pass, the second largest element is placed at the second highest index of the array. All the other elements are still unsorted.

Bubble Sort : Example 1 [3]

Observe that after the end of the third pass, the third largest element is placed at the third highest index of the array. All the other elements are still unsorted.

Bubble Sort : Example 1 [4]

Observe that after the end of the fourth pass, the fourth largest element is placed at the fourth highest index of the array. All the other elements are still unsorted.

Bubble Sort : Example 1 [5]

Observe that after the end of the sixth pass, the sixth largest element is placed at the sixth largest index of the array. All the other elements are still unsorted.

Complexity of Bubble Sort:

The complexity of any sorting algorithm depends upon the number of comparisons.

In bubble sort, we have seen that there are N–1 passes in total.

• In the first pass, N–1 comparisons are made to place the highest element in its correct position.

• Then, in Pass 2, there are N–2 comparisons and the second highest element is placed in its position.

Therefore, to compute the complexity of bubble sort, we need to calculate the total number of comparisons. It can be given as:

f(n) = (n – 1) + (n – 2) + (n – 3) + ..... + 3 + 2 + 1

f(n) = n (n – 1)/2

f(n) = n2/2 + O(n) = O(n2)

Therefore, the complexity of bubble sort algorithm is O(n2). It means the time required to execute bubble sort is proportional to n2, where n is the total number of elements in the array.

Insertion Sort:

This is an in-place comparison-based sorting algorithm. Here, a sub-list is maintained which is always sorted. For example, the lower part of an array is maintained to be sorted. An element which is to be 'inserted' in this sorted sub-list, has to find its appropriate place and then it has to be inserted there. Hence the name, insertion sort.

Insertion Sort : Technique:

The array of values to be sorted is divided into two sets. One that stores sorted values and another that contains unsorted values. The sorting algorithm will proceed until there are elements in the unsorted set.

Suppose there are n elements in the array. Initially, the element with index 0 (assuming LB = 0) is in the sorted set. Rest of the elements are in the unsorted set. The first element of the unsorted partition has array index 1 (if LB = 0).

During each iteration of the algorithm, the first element in the unsorted set is picked up and inserted into the correct position in the sorted set.

Insertion Sort: Example

Insertion Sort: Algorithm

Merge Sort:

Merge sort is a sorting algorithm that uses the divide, conquer, and combine algorithmic paradigm. Divide means partitioning the n-element array to be sorted into two sub-arrays of n/2 elements. If A is an array containing zero or one element, then it is already sorted.

However, if there are more elements in the array, divide A into two sub-arrays, A1 and A2, each containing about half of the elements of A. Conquer means sorting the two sub-arrays recursively using merge sort.

Combine means merging the two sorted sub-arrays of size n/2 to produce the sorted array of n elements.

• Merge sort algorithm focuses on two main concepts to improve its performance (running time):

1. A smaller list takes fewer steps and thus less time to sort than a large list.

2.As number of steps is relatively less, thus less time is needed to create a sorted list from two sorted lists rather than creating it using two unsorted lists.

Merge Sort : Basic Steps

1. If the array is of length 0 or 1, then it is already sorted.

2. Otherwise, divide the unsorted array into two sub-arrays of about half the size.

a. Use merge sort algorithm recursively to sort each sub-array.

b. Merge the two sub-arrays to form a single sorted list.

Merge Sort : Example

Merge Sort : Example : Merging Part

Merge Sort : Algorithm:

Merge Sort : Complexity:

The running time of merge sort in the average case and the worst case can be given as O(n logn). Although merge sort has an optimal time complexity, it needs an additional space of O(n) for the temporary array TEMP.

Quick Sort : Basic Concept:

Quick Sort is a Divide and Conquer algorithm. It picks an element as pivot and partitions the given array around the picked pivot. There are many different versions of quick Sort that pick pivot in different ways.

• Always pick first element as pivot.

• Always pick last element as pivot

• Pick a random element as pivot.

• Pick median as pivot.

The key process in Quick Sort is partition(). The target of partitions is, given an array and an element x of array as pivot, put x at its correct position in sorted array and put all smaller elements (smaller than x) before x, and put all greater elements (greater than x) after x. All this should be done in linear time.

Quick sort is a widely used sorting algorithm that makes O(n log n) comparisons in the average case to sort an array of n elements. However, in the worst case, it has a quadratic running time given as O(n2).

Basically, the quick sort algorithm is faster than other O(nlog n) algorithms because its efficient implementation can minimize the probability of requiring quadratic time. Quick sort is also known as partition exchange sort.

Quick Sort : Working

1. Select an element pivot from the array elements.

2. Rearrange the elements in the array in such a way that all elements that are less than the pivot appear before the pivot and all elements greater than the pivot element come after it (equal values can go either way).

After such a partitioning, the pivot is placed in its final position. This is called the partition operation.

3. Recursively sort the two sub-arrays thus obtained. (One with sub-list of values smaller than that of the pivot element and the other having higher value elements.) Like merge sort, the base case of the recursion occurs when the array has zero or one element because in that case the array is already sorted.

After each iteration, one element (pivot) is always in its final position. Hence, with every iteration, there is one less element to be sorted in the array. Thus, the main task is to find the pivot element, which will partition the array into two halves.

Quick Sort : Technique

Quick sort works as follows:

1. Set the index of the first element in the array to loc and left variables. Also, set the index of the last element of the array to the right variable. That is, loc = 0, left = 0, and right = n–1 (where n in the number of elements in the array)

2. Start from the element pointed by right and scan the array from right to left, comparing each element on the way with the element pointed by the variable loc. That is, a[loc] should be less than a[right].

a. If that is the case, then simply continue comparing until right becomes equal to loc. Once right = loc, it means the pivot has been placed in its correct position.

b. However, if at any point, we have a[loc] > a[right], then interchange the two values and jump to Step 3.

c. Set loc = right.

3. Start from the element pointed by left and scan the array from left to right, comparing each element on the way with the element pointed by loc. That is, a[loc] should be greater than a[left].

a. If that is the case, then simply continue comparing until left becomes equal to loc. Once left = loc, it means the pivot has been placed in its correct position.

b. However, if at any point, we have a[loc] < a[left], then interchange the two values and jump to Step 2.

c. Set loc = left.

Quick Sort : Example 1[1]

• Sort the given elements using quick sort

Quick Sort : Example 1 [2]

• Now left = loc, so the procedure terminates, as the pivot element (the first element of the array, that is, 27) is placed in its correct position.

• All the elements smaller than 27 are placed before it and those greater than 27 are placed after it. The left sub-array containing 25, 10, 18 and the right sub-array containing 36 and 45 are sorted in the same manner.

Quick Sort : Algorithm

The quick sort algorithm makes use of a function Partition to divide the array into two sub-arrays

Quick Sort : Complexity

In the average case, the running time of quick sort can be given as O(n log n). The partitioning of the array which simply loops over the elements of the array once uses O(n) time.

In the best case, every time we partition the array, we divide the list into two nearly equal pieces. That is, the recursive call processes the sub-array of half the size. At the most, only log n nested calls can be made before we reach a sub-array of size

1. It means the depth of the call tree is O(log n). And because at each level, there can only be O(n), the resultant time is given as O(n log n) time.

Practically, the efficiency of quick sort depends on the element which is chosen as the pivot. Its worst-case efficiency is given as O(n2). The worst case occurs when the array is already sorted (either in ascending or descending order) and the left-most element is chosen as the pivot.

However, many implementations randomly choose the pivot element. The randomized version of the quick sort algorithm always has an algorithmic complexity of O(n log n).

Shell Sort:

Shell sort is considered an improvement over insertion sort as it compares elements separated by a gap of several positions. This enables the element to take bigger steps towards its expected position. In Shell sort, elements are sorted in multiple passes and in each pass, data are taken with smaller and smaller gap sizes.

However, the last step of shell sort is a plain insertion sort. But by the time we reach the last step, the elements are already ‘almost sorted’, and hence it provides good performance.

Shell Sort: Techniques

To visualize the way in which shell sort works, perform the following steps:

• Step 1: Arrange the elements of the array in the form of a table and sort the columns (using insertion sort).

• Step 2: Repeat Step 1, each time with smaller number of longer columns in such a way that at the end, there is only one column of data to be sorted.

Note that we are only visualizing the elements being arranged in a table, the algorithm does its sorting in-place.

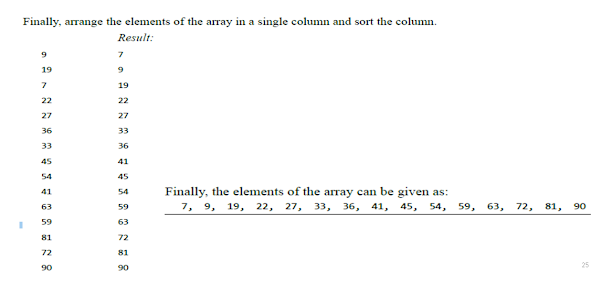

Shell Sort: Example-1 [1]

63, 19, 7, 90, 81, 36, 54, 45, 72, 27, 22, 9, 41, 59, 33

Shell Sort: Example-1 [2]

Shell Sort: Example-1 [3]

• Repeat Step 1 with smaller number of long columns.

Shell Sort: Example-1 [4]

Heap Sort or Tournament Sort:

Given an array ARR with n elements, the heap sort algorithm can be used to sort ARR in two phases:

• In phase 1, build a heap H using the elements of ARR.

• In phase 2, repeatedly delete the root element of the heap formed in phase 1.

In a max heap, we know that the largest value in H is always present at the root node. So in phase 2, when the root element is deleted, we are actually collecting the elements of ARR in decreasing order.

Heap Sort: Algorithm:

Insertion Example : Consider a binary max-heap of the given fig and insert 99 to the heap

Complexity of Heap Sort [1]

• Heap sort uses two heap operations: insertion and root deletion. Each element extracted from the root is placed in the last empty location of the array.

In phase 1, when we build a heap, the number of comparisons to find the right location of the new element in H cannot exceed the depth of H. Since H is a complete tree, its depth cannot exceed m, where m is the number of elements in heap H. Thus, the total number of comparisons g(n) to insert n elements of ARR in H is bounded as: g(n) <= n log n.

Hence, the running time of the first phase of the heap sort algorithm is O(n log n). In phase 2, we have H which is a complete tree with m elements having left and right sub-trees as heaps. Assuming L to be the root of the tree, re-heaping the tree would need 4 comparisons to move L one step down the tree H.

Since the depth of H cannot exceed O(log m), re-heaping the tree will require a maximum of 4 log m comparisons to find the right location of L in H. Since n elements will be deleted from heap H, re-heaping will be done n times. Therefore, the number of comparisons to delete n elements is bounded as:

h(n) <= 4n log n

Hence, the running time of the second phase of the heap sort algorithm is O(n log n). Each phase requires time proportional to O(n log n). Therefore, the running time to sort an array of n elements in the worst case is proportional to O(n log n).

Therefore, we can conclude that heap sort is a simple, fast, and stable sorting algorithm that can be used to sort large sets of data efficiently

Radix Sort or Bucket Sort:

Radix sort is a linear sorting algorithm for integers and uses the concept of sorting names in alphabetical order. When we have a list of sorted names, the radix is 26 (or 26 buckets) because there are 26 letters in the English alphabet. So radix sort is also known as bucket sort.

Observe that words are first sorted according to the first letter of the name. That is, 26 classes are used to arrange the names, where the first class stores the names that begin with A, the second class contains the names with B, and so on.

During the second pass, names are grouped according to the second letter. After the second pass, names are sorted on the first two letters. This process is continued till the nth pass, where n is the length of the name with maximum number of letters.

After every pass, all the names are collected in order of buckets. That is, first pick up the names in the first bucket that contains the names beginning with A. In the second pass, collect the names from the second bucket, and so on.

When radix sort is used on integers, sorting is done on each of the digits in the number. The sorting procedure proceeds by sorting the least significant to the most significant digit. While sorting the numbers, we have ten buckets, each for one digit (0, 1, 2, …, 9) and the number of passes will depend on the length of the number having maximum number of digits.

Bucket Sort : Example 1 [1]

345, 654, 924, 123, 567, 472, 555, 808, 911

After this pass, the numbers are collected bucket by bucket. The new list thus formed is used as an input for the next pass.

Bucket Sort : Example 1 [2]

• In the second pass, the numbers are sorted according to the digit at the tens place

• In the third pass, the numbers are sorted according to the digit at the hundreds place.

The numbers are collected bucket by bucket. The new list thus formed is the final sorted result. After the third pass, the list can be given as123, 345, 472, 555, 567, 654, 808, 911, 924.

Radix Sort or Bucket Sort : Algorithm:

Radix (ARR, N)

Comparison of Sort Algorithm:

External Sorting:

External sorting is a sorting technique that can handle massive amounts of data. It is usually applied when the data being sorted does not fit into the main memory (RAM) and, therefore, a slower memory (usually a magnetic disk or even a magnetic tape) needs to be used.

External sorting algorithms generally fall into two types, distribution sorting, which resembles quicksort, and external merge sort, which resembles merge sort. Typically uses a hybrid sort-merge strategy.

In the sorting phase, chunks of data small enough to fit in main memory are read, sorted, and written out to a temporary file. In the merge phase, the sorted sub-files are combined into a single larger file.

External Sorting: Application

1. Business Application where a transaction file updates a master file for example:

• Updating an inventory database based on sales

• Updating a personnel database based on new hires, promotions, dismissals etc.

2. Database Application

• For performing operations like Projection and Join.

• Projection means selecting a subset of fields and join means joining two files on a common field to create a new file whose fields are the union of the fields of the two files.

• External sorting is also used to remove duplicate records.

A general Lower Bound for Sorting

A lower bound for an algorithm is the worst-case running time of the best possible algorithm for a given problem.

Comparison sorts are limited by a lower bound of O(nlogn) , i.e., on average, comparison sorts cannot be faster than O(nlogn). The "on average" part here is important: there are many algorithms that run in very fast time if the inputted list is already sorted, or has some very particular (and overall unlikely) property.

O(nlogn) There is only one permutation of a list that is sorted, but n! possible lists, so the chances that the input is already sorted is very unlikely, and on average, the list will not be very sorted.

• Integer sorts do not make comparisons, so they are not bounded by O(nlogn). Integer sorts determine for each element K how many elements are less than K . If there are 5 elements that are less than K , then K will be placed in the 6th slot. This information is used to place each element into the correct slot immediately—no need to rearrange list.

FAQ:

1. Define Sorting in Data Structures and Algorithm.

2. What is bubble sort with an example.

3. Write about insertion sort.

4. Define Merge Sort.

Thank You!!!

Deep99Notes